Choosing between teaming and MPIO for connecting to iSCSI storage has been confusing for a very long time. With native operating system support for teaming and the introduction of SMB 3.0 shares in Windows/Hyper-V Server 2012, it all got murkier. In this article, I’ll do what I can to clear it up.

Teaming

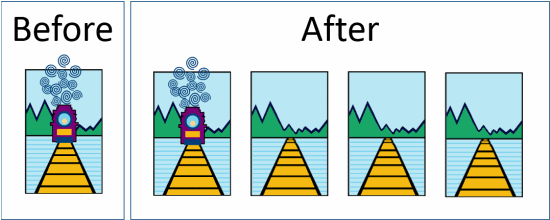

The absolute first thing I feel is necessary is to explain what teaming really does. We’re going to look at a completely different profession: train management. Today, we’re going to meet John. John’s job is to manage train traffic from Springfield to Centerville. John notices that the 4 o’clock regular takes 55 minutes to make the trip. John thinks it should take less time. So, John orders the building of three new tracks. On the day after completion, the 4 o’clock regular takes…. can you guess it?… 55 minutes to make the trip. Let’s see a picture:

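Well, gee, I guess you can’t make a train go faster by adding tracks. By the same token, you can’t make a TCP stream go faster by adding paths. What John can do now is run up to four 4 o’clock regulars simultaneously. A team works the same way: any single stream still travels over only one physical adapter at a time, but different streams can travel over different adapters at once.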
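To make the analogy concrete, here is a minimal Python sketch of an address-hash style team, assuming the team picks a member by hashing each flow’s source and destination IPs and ports. The member names, the IPs, and the use of CRC-32 as the hash are hypothetical stand-ins; Windows teaming uses its own algorithms. What it illustrates is the train problem: every packet of one stream maps to the same member, while many streams spread out.

```python
import zlib
from collections import Counter

# Hypothetical four-member team; real teams use their own hash, not CRC-32.
TEAM_MEMBERS = ["NIC1", "NIC2", "NIC3", "NIC4"]


def pick_member(flow: tuple) -> str:
    """Hash a flow's 4-tuple to exactly one team member, deterministically."""
    key = "|".join(str(part) for part in flow).encode()
    return TEAM_MEMBERS[zlib.crc32(key) % len(TEAM_MEMBERS)]


# One iSCSI-style stream (hypothetical IPs; 3260 is the iSCSI port):
# every packet carries the same 4-tuple, so it always lands on one member.
iscsi_flow = ("192.168.10.5", 49152, "192.168.10.50", 3260)
members_for_one_flow = {pick_member(iscsi_flow) for _ in range(1000)}

# Many independent flows (different source ports) spread across members.
members_for_many_flows = Counter(
    pick_member(("192.168.10.5", port, "192.168.10.50", 3260))
    for port in range(49152, 50152)
)

print("one flow ->", members_for_one_flow)
print("many flows ->", dict(members_for_many_flows))
```

Adding more members changes which NIC the single flow lands on, but never how many NICs it uses.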

iSCSI and Teaming

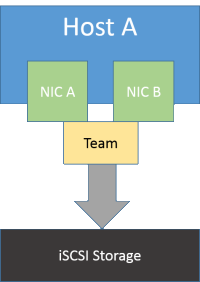

In its default configuration, iSCSI only uses one stream to connect to storage. If you put it on a teamed interface, it uses one path. Also, putting iSCSI on a team has been known to cause problems, which is why it’s not supported. So, you cannot do this:

Well, OK, you can do it. But then you’d be John. And you’d have periodic wrecks. What you want to do instead is configure multi-path I/O (MPIO) in the host. That will get you load-balancing, link aggregation, and fault tolerance for your connection to storage, all in one checkbox. MPIO has been a built-in component of Windows/Hyper-V Server at least as far back as 2008, and probably earlier. I wrote up how to do this on my own blog back in 2011, and the process is relatively unchanged for 2012.

Your iSCSI device or software target may have its own rules for how iSCSI initiators connect to it, so make sure that it supports MPIO and that you connect to it the way the manufacturer intends. For the Microsoft iSCSI target, all you have to do is give the target host multiple IPs and have your MPIO client connect to each of them. For many other devices, you team the device’s adapters and make one connection from each initiator IP to a single target IP. Teaming at the target usually works out, although it might not load-balance well.
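MPIO gets its load-balancing and fault tolerance by treating each session as a separate path to the same storage. The Python sketch below is a toy round-robin selector under stated assumptions: each path is one iSCSI session from an initiator IP to a target IP, and the policy rotates I/O across healthy paths and skips failed ones. It mirrors the idea behind a round-robin MPIO policy, not the code of Microsoft’s DSM; the class name and IPs are hypothetical.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Path:
    """One iSCSI session (hypothetical model): initiator IP -> target IP."""
    initiator_ip: str
    target_ip: str
    up: bool = True


class RoundRobinMPIO:
    """Toy round-robin path selector with failover (not the real DSM)."""

    def __init__(self, paths: list[Path]) -> None:
        self.paths = paths
        self._order = cycle(range(len(paths)))

    def next_path(self) -> Path:
        """Return the next healthy path; raise if every path is down."""
        for _ in range(len(self.paths)):
            path = self.paths[next(self._order)]
            if path.up:
                return path
        raise RuntimeError("all paths to storage are down")


paths = [Path("192.168.10.5", "192.168.10.50"),
         Path("192.168.11.5", "192.168.11.50")]
mpio = RoundRobinMPIO(paths)

# Load-balancing: consecutive I/Os alternate between the two sessions.
before = [mpio.next_path().initiator_ip for _ in range(4)]

# Fault tolerance: if one path fails, I/O continues on the survivor.
paths[0].up = False
after = [mpio.next_path().initiator_ip for _ in range(4)]

print(before)  # ['192.168.10.5', '192.168.11.5', '192.168.10.5', '192.168.11.5']
print(after)   # ['192.168.11.5', '192.168.11.5', '192.168.11.5', '192.168.11.5']
```

Because each session is its own TCP connection, this is the one place where adding paths really does add usable bandwidth for storage.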

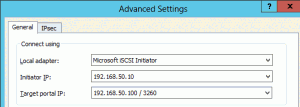

To establish the connection from my Hyper-V/Windows Server unit, I go into iscsicpl.exe and go through the discovery process. Then I highlight an inactive target and use “Properties” and “Add Session” instead of “Connect”. I check “Enable multi-path” and “Advanced”. Then I manually enter all IP information:

I then repeat this for the other initiator IPs and target IPs: one-to-one if the target has multiple IPs, or many-to-one if it doesn’t. In some cases, the initiator can work with the target portal to figure out the connections automatically, so you might be able to get away with using just the default initiator and the IP of the target, but this doesn’t seem to behave consistently, and I like to be certain.
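The pairing rule in the previous paragraph is simple enough to write down. This Python sketch turns lists of initiator and target IPs into the sessions you would add by hand through “Add Session”. The function name and addresses are hypothetical, and treating uneven IP counts as an error is my own assumption, not something the initiator enforces.

```python
def plan_sessions(initiator_ips: list[str],
                  target_ips: list[str]) -> list[tuple[str, str]]:
    """Return (initiator IP, target IP) pairs, one per session to add."""
    if not initiator_ips or not target_ips:
        raise ValueError("need at least one initiator IP and one target IP")
    if len(target_ips) == 1:
        # Many-to-one: every initiator IP gets its own session to the single target IP.
        return [(ip, target_ips[0]) for ip in initiator_ips]
    if len(target_ips) == len(initiator_ips):
        # One-to-one: pair each initiator IP with one target IP, in order.
        return list(zip(initiator_ips, target_ips))
    # Assumption: uneven counts are left to a human to decide.
    raise ValueError("uneven IP counts; choose the pairing by hand")


# Target with two IPs (e.g. the Microsoft iSCSI target): one-to-one.
print(plan_sessions(["192.168.10.5", "192.168.11.5"],
                    ["192.168.10.50", "192.168.11.50"]))
# Target whose adapters are teamed behind one IP: many-to-one.
print(plan_sessions(["192.168.10.5", "192.168.10.6"], ["192.168.10.60"]))
```

Each pair it prints corresponds to one pass through the “Advanced” dialog, choosing that initiator IP and target portal IP.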

Teaming and SMB 3.0

SMB 3.0 has the same issue as iSCSI. The cool thing is that the solution is much simpler. I read somewhere that for SMB 3.0 multichannel to work, all the adapters have to be in unique subnets. In my testing, this isn’t true. Open a share on a multi-homed server running SMB 3.0 from a multi-homed client running SMB 3.0, and SMB 3.0 just magically figures out what to do. You can’t use teaming for it on either end, though. Well, again, you can, but you’ll be John. Don’t be John. Unless your name really is John, in which case I apologize for not picking a different random name.

But What About the Virtual Switch?

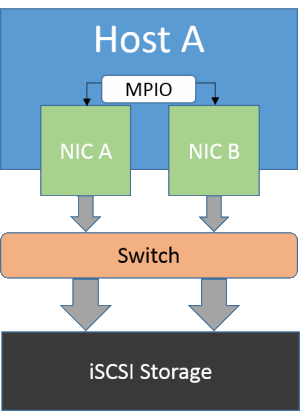

Here’s where I tend to lose people. Fasten your seat belts and hang on to something: you can use SMB 3.0 and MPIO over converged fabric. “But, wait!” you say, “That’s teaming! And you said teaming was not supported for any of this!” All true. Except that there’s a switch in the middle here and that makes it a supported configuration. Consider this:

This is a completely working configuration. In fact, it’s what most of us have been doing for years. So, what if the switch happens to be virtual? Does that change anything? Well, you probably have to add a physical switch in front of the final storage destination, but that doesn’t make it unsupported.

Making MPIO and SMB 3.0 Multichannel Work Over Converged Fabric

The trick here is that you must use multiple virtual adapters the same way that you would use multiple physical adapters. They need their own IPs. If it’s iSCSI, you have to go through all the same steps of setting up MPIO. When you’re done, your virtual setup on your converged fabric must look pretty much exactly like a physical setup would. And, of course, your converged fabric must have enough physical adapters to support the number of virtual adapters you placed on it.

Of course, this depends on a few factors all working. Your team’s load-balancing method has to spread the virtual adapters across the physical adapters, and your physical switch has to cooperate. My virtual switch is currently in Hyper-V Transport mode, and both MPIO and SMB 3.0 multichannel appear to be working fine. If you aren’t so lucky, then you’ll have to break your team and use regular MPIO and SMB 3.0 multichannel the old-fashioned way.
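Whether a converged setup really looks like a physical one can be checked mechanically. Here is a minimal Python sketch, assuming you already know each storage vNIC’s IP and which physical team member it currently uses (in Hyper-V Port load-balancing mode, for example, each virtual adapter is assigned to one team member). The function, vNIC names, and IPs are hypothetical, and gathering the placement from a real host is left out.

```python
import ipaddress


def check_storage_vnics(vnic_ips: dict[str, str],
                        placement: dict[str, str],
                        team_members: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the layout looks sane.

    vnic_ips:  storage vNIC name -> its IP address (hypothetical inventory)
    placement: storage vNIC name -> team member it currently uses (assumed known)
    """
    problems = []
    ips = [ipaddress.ip_address(ip) for ip in vnic_ips.values()]
    if len(set(ips)) != len(ips):
        problems.append("each storage vNIC needs its own IP")
    if len(vnic_ips) > len(team_members):
        problems.append("more storage vNICs than physical adapters")
    used = [placement.get(name) for name in vnic_ips]
    if None in used:
        problems.append("placement unknown for at least one storage vNIC")
    elif len(set(used)) < len(used):
        # Two sessions on one physical NIC means MPIO/multichannel gains nothing.
        problems.append("two storage vNICs share one physical adapter")
    return problems


members = ["pNIC1", "pNIC2", "pNIC3", "pNIC4"]
vnics = {"Storage1": "192.168.10.5", "Storage2": "192.168.11.5"}

print(check_storage_vnics(vnics, {"Storage1": "pNIC1", "Storage2": "pNIC3"}, members))
# -> []
print(check_storage_vnics(vnics, {"Storage1": "pNIC2", "Storage2": "pNIC2"}, members))
# -> ['two storage vNICs share one physical adapter']
```

The second case is the situation where you would break the team and go back to dedicated adapters.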

There is one other consideration for SMB 3.0 multichannel: the virtual adapters do not support RSS (Receive Side Scaling), which can reportedly accelerate SMB communication by as much as 10%. But…

Have Reasonable Performance Expectations

In my experience, people’s storage doesn’t go nearly as fast as they think it does. Furthermore, most people aren’t demanding as much of it as they think they are. Finally, a lot of people seem to dramatically overestimate the performance needed for it to be acceptable. I’ve noticed that when I challenge people who are arguing with me to show me real-world performance metrics, they are very reluctant to show anything except file copies, stress-test results, and edge-case anecdotes. It’s almost as if people don’t want to find out what their systems are actually doing.

So, if I had only four NICs in a clustered Hyper-V Server system, and my storage target was on SMB 3.0 with two or more NICs, I would be more inclined to converge all four NICs in my Hyper-V host and lose the power of RSS than to squeeze management, Live Migration, cluster communications, and VM traffic onto only two NICs. I gain superior overall load-balancing and redundancy at the cost of a performance boost of 10% or less that I would have been unlikely to notice in general production anyway. It’s a good trade. Now, if that same host had six NICs, I would dedicate two of them to SMB 3.0.
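The four-NIC trade can be put into rough numbers. This Python arithmetic is a sketch under loudly hypothetical assumptions: four 1 GbE NICs, two SMB vNICs in the converged design, and the full “as much as 10%” RSS benefit counted against convergence. It is not a benchmark; it only shows why the worst-case cost to the storage ceiling stays under 10%, while the other traffic gets twice as many NICs to share.

```python
NIC_GBPS = 1.0      # hypothetical: four 1 GbE adapters in the host
RSS_BOOST = 0.10    # worst case: RSS would have helped SMB by the full 10%
STORAGE_VNICS = 2   # hypothetical: two SMB vNICs on the converged team


def smb_ceiling_gbps(paths: int, rss: bool) -> float:
    """Rough SMB throughput ceiling across `paths` adapters.

    Simplification: with RSS, SMB reaches line rate; without it, SMB
    reaches line rate / (1 + RSS_BOOST). Not a measured figure.
    """
    per_path = NIC_GBPS if rss else NIC_GBPS / (1 + RSS_BOOST)
    return paths * per_path


# Design A: converge all four NICs; SMB runs over vNICs, so no RSS.
# Every role (SMB included) shares all four NICs.
a_smb = smb_ceiling_gbps(STORAGE_VNICS, rss=False)
a_other_nics = 4

# Design B: two NICs dedicated to SMB with RSS; management, Live
# Migration, cluster, and VM traffic squeezed onto the other two.
b_smb = smb_ceiling_gbps(2, rss=True)
b_other_nics = 2

smb_loss = 1 - a_smb / b_smb
print(f"A: SMB ceiling {a_smb:.2f} Gbps; other traffic shares {a_other_nics} NICs")
print(f"B: SMB ceiling {b_smb:.2f} Gbps; other traffic shares {b_other_nics} NICs")
print(f"SMB ceiling given up by converging: {smb_loss:.1%}")  # 9.1%
```

Swap in your own NIC speeds and counts; better yet, replace the assumptions with real-world metrics from your own systems.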